Sogou found about 12,131 relevant results

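Online robots.txt File Generator - UU在线工具

What Is Robots.txt? How Do You Set It Up? A Must-Read Website Optimization Guide and Precautions_Search Engines...

Google SEO Reminder: Robots.txt Cannot Block Access|Crawlers|Servers|seo|robots_NetEase...

Study Notes: The robots.txt File - ScopeAstro - Cnblogs

How to Configure a robots.txt File for Apache - 编程学习网

robots.txt Setup and Optimization_How to Optimize a Site's robots File - CSDN Blog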

- From: AnnaWt

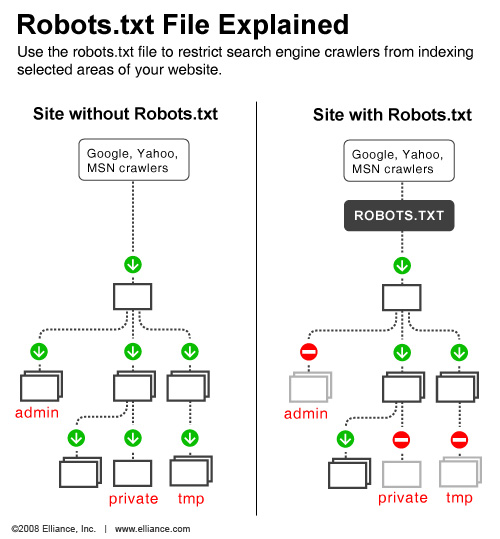

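- I. First, a primer on the concept of robots.txt: robots.txt (always lowercase) is an ASCII-encoded text file stored in a website's root directory. It usually tells web search engine robots (also called web spiders), this site...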

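Robots.txt Explained - CSDN Blog

- From: 美奇开发工作室

- Robots.txt is a plain-text file stored in a site's root directory. Although it is simple to set up, it is powerful. It can tell search engine spiders to crawl only specified content, or forbid search engine spiders from crawling the site's...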
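
As a rough illustration of the allow/forbid behavior this snippet describes, the sketch below feeds a small rule set to Python's standard-library `urllib.robotparser` and asks whether a crawler may fetch two URLs. The rules, the `ExampleBot` user agent, the `example.com` URLs, and the `build_parser` helper are all hypothetical, invented for this example rather than taken from any of the listed pages.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules, invented for illustration only:
# every crawler is asked to stay out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt content held in a string (no network access)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A path with no matching rule is allowed by default.
print(parser.can_fetch("ExampleBot", "https://example.com/index.html"))

# A path under the disallowed prefix is reported as off-limits.
print(parser.can_fetch("ExampleBot", "https://example.com/private/data.html"))
```

Note that a check like this only tells a cooperating crawler what the site requests. As one of the result titles above points out, robots.txt by itself does not block access.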

Google Open-Sources Its robots.txt Parser
